I’ve been listening to what various players in the IT market say about AI and its impact on the job market: large companies, tech giants, small businesses employing 1-5 people, recruiters, employees, subcontractors, freelancers, AI influencers, and AI trainers. In my opinion, each of them twists reality to suit their own interests.

Input data

My opinion on this topic is, unfortunately, harsh on some parts of the market. To examine it properly, we need several perspectives and some input data. Based on the following components, I will try to realistically assess the market environment in which we find ourselves.

1. Employee perspective (freelancers, specialists, beginners)

2. Employer perspective (owners, shareholders, managers)

3. Software vendor perspective

4. Employment research

5. My perspective and conclusions

Let’s go through the individual points one by one. At the end, I’ll summarize them and add my personal opinion about the market.

Employee Perspective

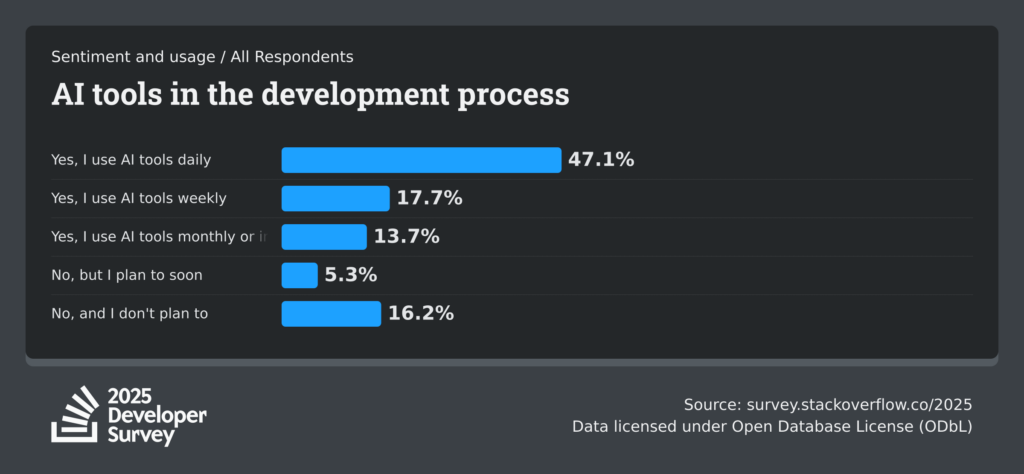

In 2025, Stack Overflow conducted its annual developer survey. Depending on the question, between approximately 20,000 and 60,000 IT professionals responded. The most interesting section for our discussion is the one on AI: https://survey.stackoverflow.co/2025/ai/. 47% of respondents use AI tools in their development process daily, and only 16% of all developers say they do not plan to use AI in software development.

Tools in the development process

This paints a clear picture: approximately 84% of respondents already use or plan to use AI tools, which suggests a broadly positive attitude toward them. However, “tools” is a very general term, covering chatting, vibe-coding, syntax suggestions, content generation, and so on. Much of this isn’t strictly software development, but rather everyday use for various purposes.

Sentiment

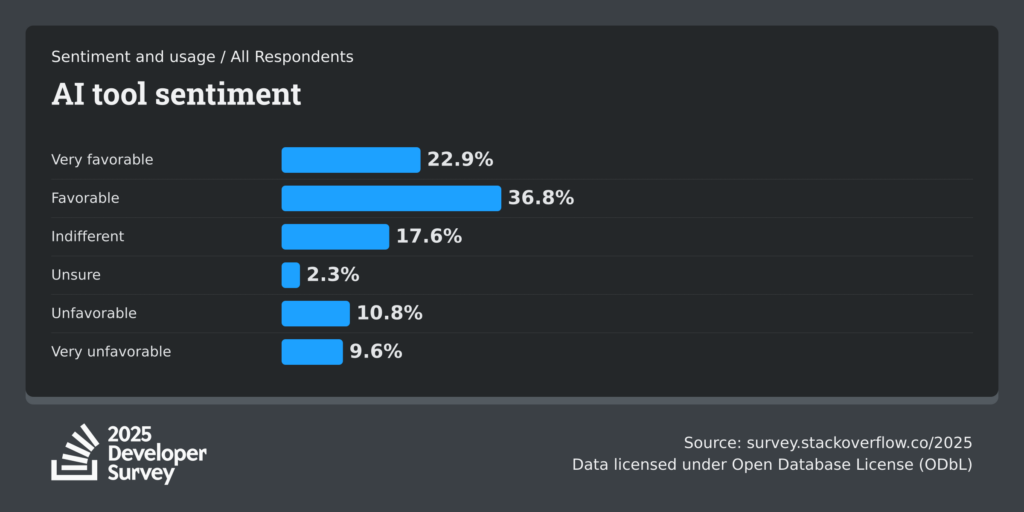

Another interesting trend is sentiment: positive sentiment toward AI tools has fallen from 70% and above in 2023 and 2024 to around 60% today (late 2025), as the chart above shows.

As we use these tools more than in previous years, some of us notice their imperfections or find that they fail to meet our needs. The hype pervasive in the media, particularly on LinkedIn, builds high expectations. In practice, if we can’t “talk” to AI effectively, we won’t be able to solve many problems with it.

Intended use

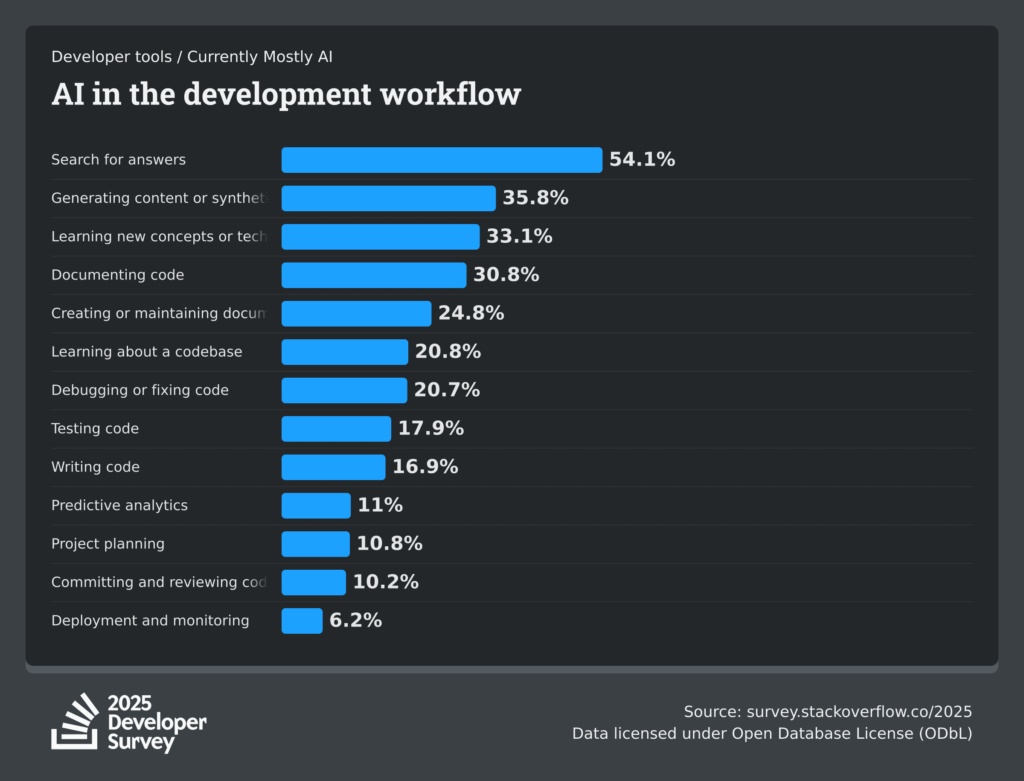

We see further interesting answers in the question: “Which parts of your development workflow are you currently integrating into AI or using AI tools to accomplish or plan to use AI to accomplish over the next 3 – 5 years? Please select one for each scenario.”

Writing code is definitely not the dominant reason for using AI. We mostly use models for content generation, research, and learning. I personally ask ChatGPT a lot of questions, which lets me learn specific topics in a short time. You no longer need to read an entire book or complete a 50-hour course to get the information you need. It’s a matter of asking the right questions and steering the conversation toward obtaining and visualizing the information you’re looking for.

Accuracy

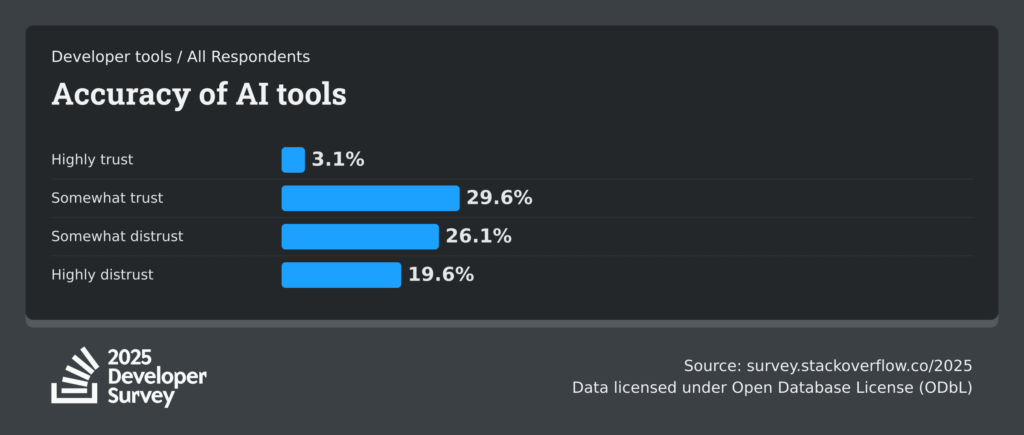

Let’s move on to the next section of the survey: AI accuracy. The chart above shows that confidence in the accuracy of AI output leaves much to be desired; respondents are rather skeptical on this point.

Moreover, almost half of the respondents expressed distrust. Frankly, I didn’t expect such negative responses. I notice distortions and hallucinations myself, but I wouldn’t go so far as to say that I distrust the answers the models generate. As the saying goes: trust, but verify!

Frustrations

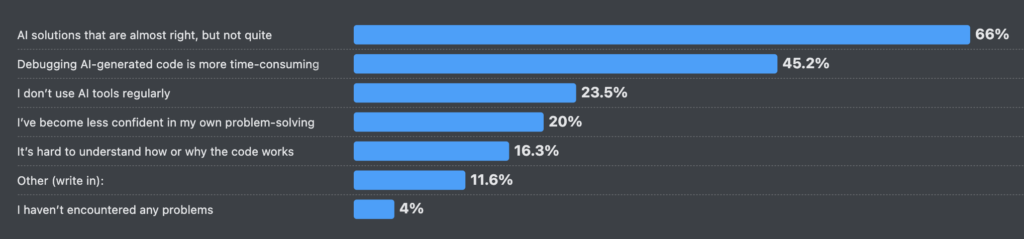

Following this negative trend discussed in the “Accuracy” chapter, let’s take a look at where AI causes frustration for users:

The biggest sources of frustration are:

- “AI solutions that are almost right, but not quite”

- “Debugging AI-generated code is more time-consuming”

Both reasons are understandable. We want our productivity to increase, which is why we reach for various tools. If we have to learn how to use agents, chats, or generators, we expect a return on that investment in the form of time saved later on our tasks. As we can see, people dissatisfied with working with AI don’t see that return. On a personal level, this leads to frustration; for companies, it has a real impact on finances and business efficiency.
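To make the return-on-investment argument concrete, here is a minimal back-of-the-envelope sketch. All numbers in it are hypothetical placeholders, not survey data: the hours spent learning a tool, the hours it saves per week, and the extra hours per week spent debugging “almost right” output. The function name `break_even_weeks` is illustrative only.

```python
from typing import Optional


def break_even_weeks(learning_hours: float,
                     hours_saved_per_week: float,
                     extra_debug_hours_per_week: float) -> Optional[float]:
    """Weeks until the time invested in learning an AI tool is paid back.

    Returns None when the net weekly gain is zero or negative, i.e. the
    tool never pays for itself. All inputs are hypothetical estimates.
    """
    net_gain = hours_saved_per_week - extra_debug_hours_per_week
    if net_gain <= 0:
        return None
    return learning_hours / net_gain


# Hypothetical scenarios: same learning cost, different debugging overhead.
print(break_even_weeks(40, 5, 1))  # 10.0
print(break_even_weeks(40, 5, 4))  # 40.0
print(break_even_weeks(40, 5, 6))  # None: debugging eats more time than the tool saves
```

Under these assumptions, the same learning cost pays off in 10 weeks, in 40 weeks, or never, depending only on how much of the saved time is consumed by debugging.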

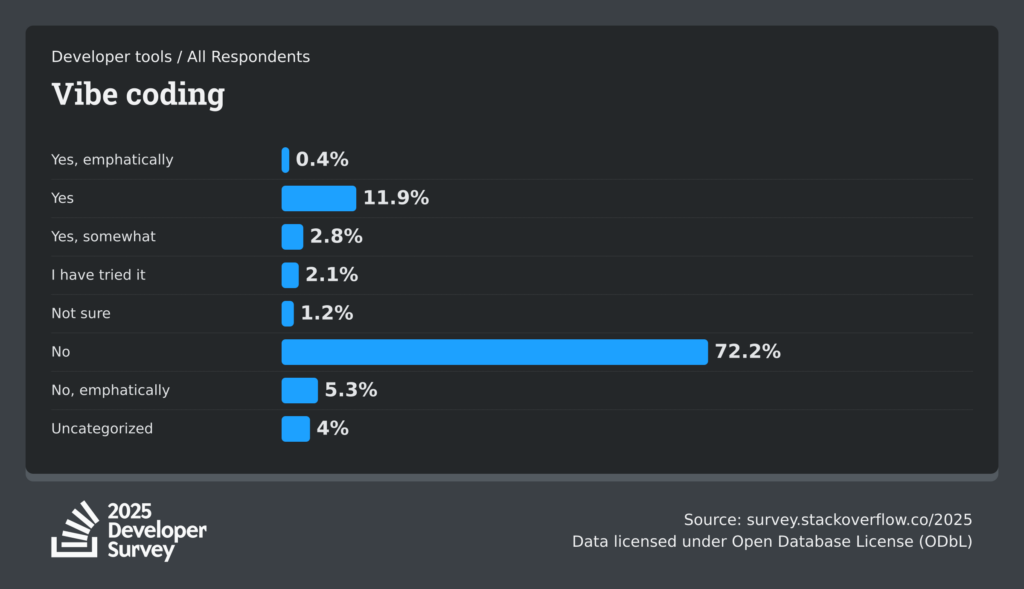

Vibe coding

Let’s start by explaining what vibe-coding actually is. In 2025, Andrej Karpathy coined the term “vibe-coding” to describe a way of collaborating with coding agents: developing software without writing the code yourself, simply by communicating with an agent built on an LLM. The survey shows that only 25% of respondents, out of over 20,000, use agents in this way.

This is also surprising. It suggests that vibe-coding hasn’t yet matured enough to dominate the way programmers work.

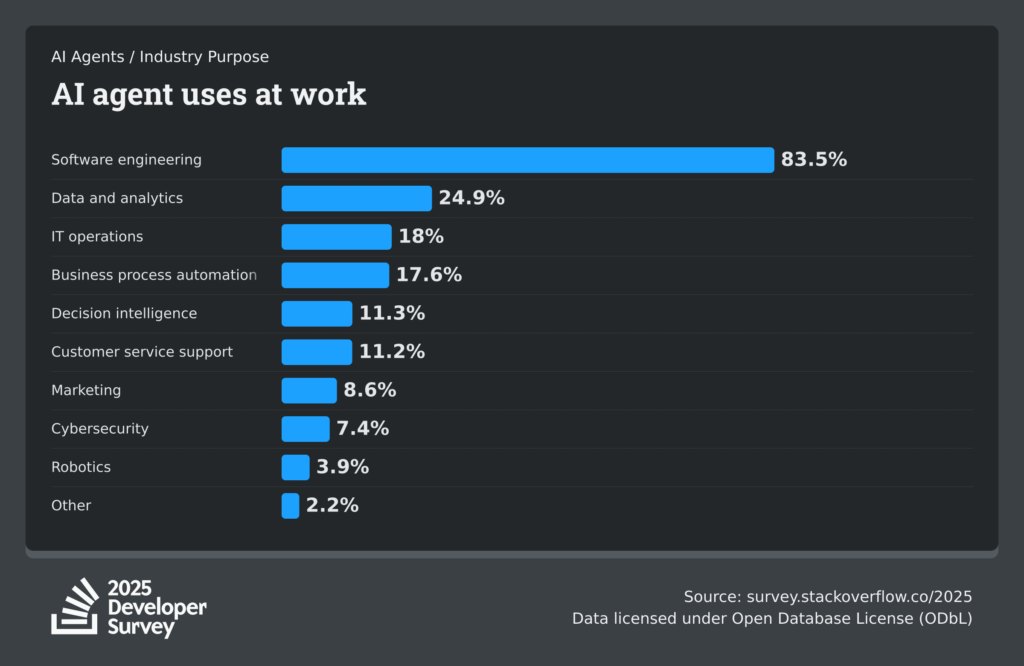

AI Agents Usage

The next question concerns what AI agents are used for. The vast majority (83.5%) use them for software engineering. This result may be somewhat biased by the respondent profile, since most of them work in this area. Nevertheless, it shows a strong link between software development and the use of AI agents.

LLMs are very good at coding. This is because, as the name suggests, learning a language, its syntax and semantics, is, one might say, the narrow specialization of language models, and programming languages are languages too.

Perception of model intelligence

We often conflate a person’s intelligence with their language skills, and we do the same with models. In programming, writing code itself seems to be a problem that LLMs have solved. However, this doesn’t mean that a programmer’s work ceases to be meaningful. On the contrary, we can now focus on things LLMs aren’t best at, such as design, modeling, architecture, UX, and so on. The actual writing of code, the final phase of code production, can be safely delegated to agents, subject to certain rigors and constraints.

On the other hand, we see frustration and dissatisfaction with AI’s performance in other areas. Where we need high precision, freedom from hallucinations, and precise planning, AI still falls short, as expressed by a significant number of respondents.

My Subcontractor Perspective

From the perspective of a contractor or employee, I see the downsides of using AI. At the same time, I believe that in the coming years we will develop several frameworks for working with agents (Claude Code is already doing this, and so are competitors). The goal will be to minimize hallucinations and, in a sense, force agents to replicate our work or even produce higher-quality code.

I’m also trying to develop my own methodology and describe it in my repos, for example here. I don’t know if I’ll develop this idea intensively, but several such methodologies or frameworks have already been created, and I think it’s something we can’t avoid.

It’s impossible to manage LLMs without a specific methodology and documentation. By throwing in ad-hoc prompts, we expose ourselves to significant difficulties in understanding, debugging, developing, and reviewing the code. The market already recognizes this, which is why there’s been a lot of talk lately about SDD (Spec-Driven Development), where we prepare the relevant artifacts, such as specifications, before handing off the coding. This is very similar to my CLAP methodology; I’m developing it so that validating and bootstrapping ideas is inexpensive and can be reduced to a few sessions with agents. However, that’s a topic for a separate post, which I’ll try to write in the near future.
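As a minimal sketch of the spec-first idea, assume the spec is a list of agreed input/output examples written before any code is delegated to an agent, and that every generated candidate is checked against it before review. The slug-making feature, `SPEC`, and both candidate functions below are hypothetical stand-ins for agent output; this is not the CLAP methodology or any particular SDD tool, only an illustration of “trust, but verify” applied to generated code.

```python
import re
from typing import Callable, List, Tuple

# The spec: agreed input/output pairs, written BEFORE any code is generated.
# The feature (turning a title into a URL slug) is a hypothetical example.
SPEC: List[Tuple[str, str]] = [
    ("Hello World", "hello-world"),
    ("  Trim me  ", "trim-me"),
    ("Already-slugged", "already-slugged"),
    ("Symbols & stuff!", "symbols-stuff"),
]


def check_against_spec(candidate: Callable[[str], str]) -> List[str]:
    """Return a list of spec violations (an empty list means the candidate passes)."""
    failures = []
    for given, expected in SPEC:
        actual = candidate(given)
        if actual != expected:
            failures.append(f"{given!r}: expected {expected!r}, got {actual!r}")
    return failures


# Two hypothetical agent outputs: one "almost right", one that meets the spec.
def almost_right(text: str) -> str:
    return text.lower().replace(" ", "-")


def meets_spec(text: str) -> str:
    # Keep only runs of lowercase letters/digits and join them with hyphens.
    return "-".join(re.findall(r"[a-z0-9]+", text.lower()))


print(check_against_spec(almost_right))  # two violations
print(check_against_spec(meets_spec))    # []
```

The “almost right” candidate passes half of the examples, which is exactly the kind of output that is cheap to catch against a spec and expensive to catch in a later debugging session.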

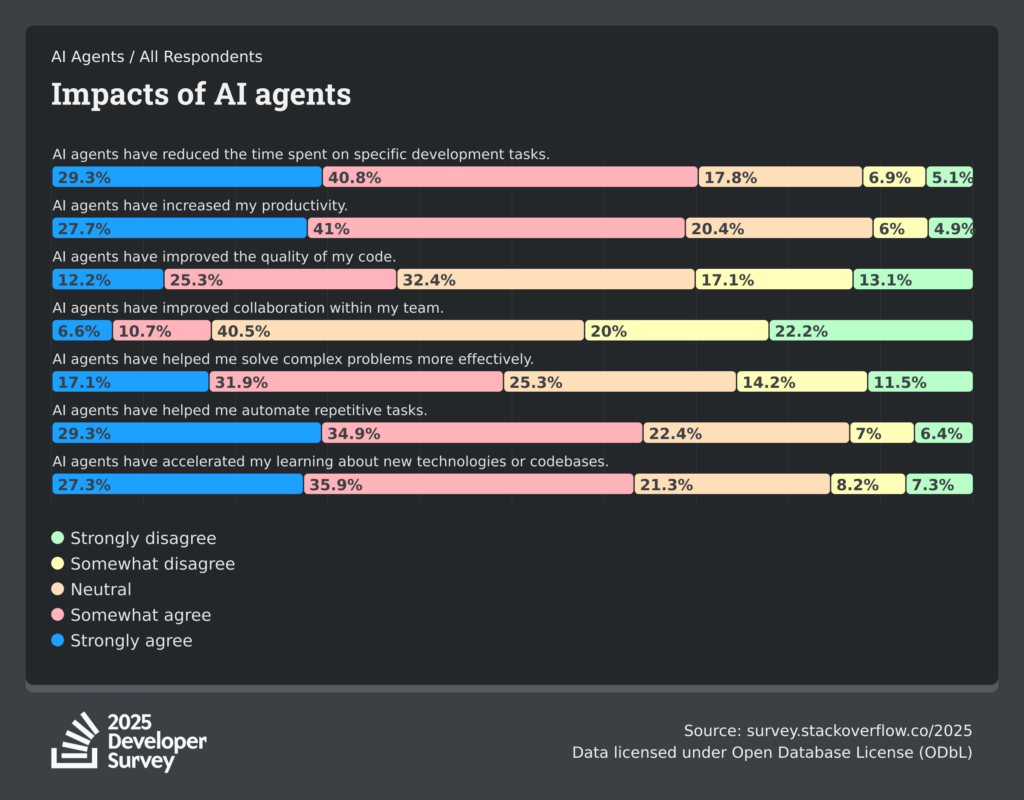

Key issue: real impact on efficiency

The final survey question I’ll cover addresses the use of agents at work and their impact on efficiency. It’s easy to see that only in the area of team communication and collaboration do developers report a neutral-to-negative impact from AI agents.

The most important statement, “AI agents have increased my productivity,” shows that over 65% of respondents report higher productivity. Despite mistakes and hallucinations, the process of writing code (tapping on the keyboard) takes a lot of time, and LLMs are excellent at it.

Previously, we used tools like IntelliSense in the IDE, and later language models, to suggest syntax, complete lines of code, or autocomplete entire files. Today, we know that an LLM trained specifically for coding can write decent code faster than we, trained specialists, can. However, writing is only a fraction of the entire process, and we must remember this: a good coder ≠ a good software engineer.

Leaders of AI companies (e.g., Dario Amodei of Anthropic and Sam Altman of OpenAI) are indeed making statements suggesting that AI will write more and more code and that the role of programmers will change. But this is more of a prediction than a declaration that “we don’t code anymore.” Internally, these companies employ thousands of engineers who write a great deal of code, increasingly with the help of AI.

Employer’s perspective

I’ll keep this chapter brief. It’s important to remember that hiring programmers over the past 20 years has been costly. It’s a simple matter of supply and demand. The infrastructure built during the dot-com bubble has enabled us to digitize most of our lives, from health-related matters to financial management to childcare. As a result, demand for people who “can talk to machines” has grown steadily, and the supply of workers hasn’t kept pace. I discussed this topic in the context of the Polish market here.

The difficulty of programming lies primarily in abstract thinking and in recognizing which patterns to apply. On top of that come software archetypes, design patterns, methodologies, and layers of abstraction, plus the hassle of setting up a local development environment. AI is now tackling all these problems, as it can understand code at a human-like level. But what impact does this have on employers?

It’s important to understand that for most companies, programmers represent a significant expense. In companies where software is a major part of the business, developers are often the most expensive employees. Therefore, any savings that don’t reduce quality seem tempting. Here’s some data from the largest technology companies:

Layoffs at Google and layoff plans at other technology companies point to a trend: downsizing in software development. However, we can’t definitively determine whether AI contributed to this. Perhaps companies have overinvested in AI and LLM development, which also requires engineers. Perhaps AI is enabling downsizing through increased productivity. We’ll have to wait a while for specific data.

Employers often boast that they’ve reduced their workforce thanks to automation and AI, but these cuts aren’t happening in engineering departments. Customer service and departments with more repetitive work are usually the first to be cut; consider Salesforce and Klarna, for example. In Klarna’s case, the move proved to be a mistake, which shows that replacing an employee with an LLM is no easy feat.

A recent article from December 22, 2025, shows the impact of AI on the US labor market. It also reports layoffs, primarily at Microsoft, where programmers make up a significant portion of the workforce. However, these layoffs aren’t due to the automation of software development itself, but to a shift in the company’s profile from software development to building AI-based tools, which calls for machine learning engineers, data scientists, and mathematicians.

I haven’t seen a case study anywhere of software workers being replaced purely by AI, or more specifically, coding agents. LLMs remain merely tools in the hands of employees, not independent workers.

Summary of the employer’s perspective

From the employer’s perspective, it would be ideal to lay off all employees and pay only for electricity and tokens. However, no one has yet been able to prove that such an investment (yes, it’s a massive change-management effort, which is expensive and risky – that’s why I call it an investment) can pay off in a reasonable time. Laying off employees also means losing know-how and SOPs. In most companies, this knowledge isn’t documented at all but is dispersed among employees. Then there’s the interpersonal politics in corporations, where who you know matters more than what you know. All of this significantly complicates parameterizing and structuring work and, consequently, replacing the building blocks (people) with other, less expensive ones.

First, it’s unclear whether these new building blocks are actually cheaper; second, it’s unclear whether they even meet the requirements currently placed on employees. Klarna learned this the hard way, laying off and then rehiring people. It’s not as simple as it might seem, even though employers play the trickster a bit, using this narrative to undermine programmers’ negotiating power during job interviews (the shrinking market is a fact, but more on that shortly).

Demand will certainly change, but I wouldn’t say it already has. We’re also flooded with mixed signals, and it’s very difficult to determine whether a company has actually reduced its workforce because it uses AI or because it’s cash-strapped and needs to show shareholders that it will remain profitable. This is a very difficult question. Furthermore, the labor market isn’t uniform. Startups and small organizations are a different story: there, trying AI in place of an employee has a greater impact on financial results and is also easier to implement. In corporations, laying off and replacing employees can be a process that’s not worth the gamble. I still see a lot of uncertainty and ambiguity in the market. We’ll have to wait another year or two, until the bubble bursts, to see what AI can actually offer and whether it can realistically replace programmers. The situation is similar in other professions. Only time will tell whether these are pipe dreams or a real substitute for humans.

Software vendor perspective

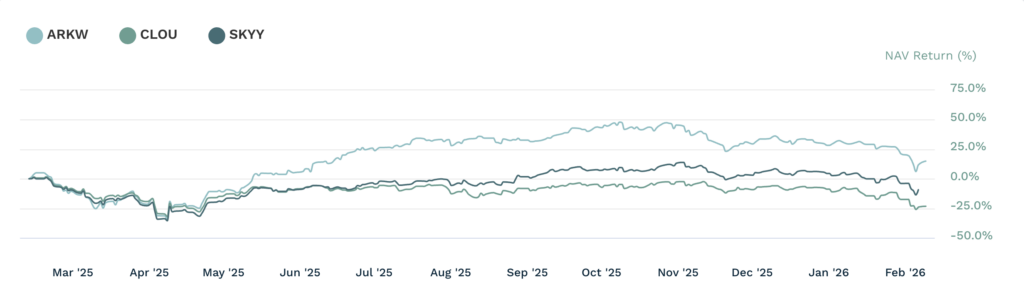

This topic is very broad; I’ll start by highlighting the sentiment in the SaaS market. Let’s take a look at the stock market and the shares of software companies today. I recommend reading the article discussing software vendors (especially SaaS) from the perspective of an investor looking at companies as a potential source of profit.

The tone is rather negative, but not unequivocal. This is a very interesting analysis. Let’s take a look at the charts:

The ETFs above focus on technology companies in the software sector; most of their holdings are SaaS or other software providers. The significant decline, back to pre-summer 2025 levels, demonstrates decidedly negative investor sentiment. Investor opinions matter because they determine where the money flows. Both individual and institutional investors are placing bets in the hope of profit. Today they seem to be saying “show me,” expecting proof that SaaS still has a legitimate place in the market. However, this doesn’t mean their concerns are justified. Software vendors have every opportunity to demonstrate the superiority of their solutions over homegrown, ad-hoc, vibe-coded applications built by in-house teams.

Perhaps the SaaS scenario will play out badly for these companies. It’s hard to say. One thing is certain: AI will affect both the companies themselves and their employees. If it turns out that SaaS products are no longer needed, many programmers and specialists in fields surrounding software development will simply lose their jobs. The money will flow to vibe-coding tools and model providers.

Personally, I don’t pay much attention to individual company shares; I try to look at market trends. Here we can clearly see a downward trend, though it’s not yet clear whether it’s a lasting shift or just a correction. What is clear is how the narrative surrounding software development is influencing investors. This doesn’t necessarily mean imminent company failures or mass layoffs, but it is a powerful indicator. Investors are voting with their wallets, selling shares in companies whose revenue they believe will be drained. In this case, the money would flow to energy suppliers, GPU and server makers, and, to a lesser extent, companies like OpenAI, Anthropic, or ElevenLabs.

I’ll also touch on the profitability of the companies mentioned above. According to its own forecast, OpenAI expects a $14 billion loss in 2026.

Anthropic is still not profitable and doesn’t expect to be until at least 2028. The article doesn’t provide specific numbers, but it’s clear that the company is still a long way from profitability.

ElevenLabs, a company founded by Poles, seems to be in a slightly better position. Its press materials don’t disclose profitability figures, but the article clearly indicates an $11 billion valuation in the next financing round. I expect that after an IPO, we’ll learn that of these three players, ElevenLabs is performing the best.

These are providers of models and of services directly related to them. They own the IP, have the people, control the infrastructure, and have investor money. Even so, the prices they currently offer may be too low to keep developing their products without external financing.

Andrew Ng recently said that, for several years now, progress on the problems LLMs solve has come only from scaling. Since the transformer architecture was published, we have been relying on the same family of solutions. The technology itself doesn’t make leaps; progress is simply the result of ever more hardware and nodes processing massive amounts of data. Thanks to the transformer architecture, we can scale models and increase their accuracy at a rate resembling a logarithmic function: without a clear asymptote (the curve looks like a rising arc), but also without any significant leaps.
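To illustrate the shape described above, here is a toy model, not real benchmark data: assume a score that grows with the logarithm of compute, score = a + b·log2(compute), where the constants `a` and `b` and the function name `toy_score` are made up. Under this assumption, every doubling of compute adds the same fixed increment, so the curve keeps rising without sudden jumps, and each further gain costs twice as much compute as the previous one. Real scores are bounded (accuracy cannot exceed 100%), so this only describes the trend over a limited range.

```python
import math


def toy_score(compute: float, a: float = 20.0, b: float = 5.0) -> float:
    """Hypothetical logarithmic scaling curve: score = a + b * log2(compute)."""
    return a + b * math.log2(compute)


# Double the compute several times and look at the gain per doubling.
previous = None
for exponent in range(6):
    compute = 2 ** exponent  # 1, 2, 4, ..., 32 (arbitrary units)
    score = toy_score(compute)
    gain = None if previous is None else score - previous
    print(f"compute={compute:>3}  score={score:5.1f}  gain={gain}")
    previous = score
```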

BTW, I recommend his lectures; they’re a great introduction to the world of ML.

Returning to the topic: at some point, if the business model doesn’t change, the largest vendors will have to raise prices (x20, x30, etc.). Their solutions will still be cheaper than human labor, but the difference won’t be that significant. At that point, it may no longer pay off for companies to develop software in-house.
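The pricing argument above can be sketched as simple arithmetic. Everything here is hypothetical: the monthly cost of the engineer overseeing the agents, today’s monthly token spend, and the SaaS subscription price are placeholders, and real costs such as maintenance, risk, and change management are ignored. The sketch only shows how, as token prices are multiplied, in-house development can cross the point where buying SaaS becomes cheaper.

```python
def in_house_monthly_cost(engineer_cost: float, token_cost: float,
                          price_multiplier: float) -> float:
    """Hypothetical monthly cost of building and maintaining a tool in-house with AI agents."""
    return engineer_cost + token_cost * price_multiplier


def break_even_multiplier(engineer_cost: float, token_cost: float,
                          saas_cost: float) -> float:
    """Token-price multiplier at which in-house cost equals the SaaS subscription."""
    return (saas_cost - engineer_cost) / token_cost


# Placeholder numbers (per month, arbitrary currency): NOT real market data.
ENGINEER, TOKENS, SAAS = 2_000.0, 400.0, 8_000.0

for multiplier in (1, 10, 20, 30):
    cost = in_house_monthly_cost(ENGINEER, TOKENS, multiplier)
    cheaper = "in-house" if cost < SAAS else "SaaS"
    print(f"x{multiplier:<2} in-house={cost:>8.0f}  SaaS={SAAS:.0f}  cheaper: {cheaper}")

print(break_even_multiplier(ENGINEER, TOKENS, SAAS))  # 15.0
```

With these made-up inputs, in-house stays cheaper at x1 and x10 but loses at x20 and x30; different inputs move the break-even point, which is exactly why the outcome is hard to predict.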

Developing solutions in-house carries a risk that may be too much for existing SaaS customers. Even for an agile company, it requires a significant amount of change. If a company doesn’t find a methodology that truly allows it to develop software without external vendors, it will be forced to keep using SaaS. The diversity of SaaS products must also be considered. Small SaaS solutions for simple calculations, analysis, and so on can be easily replaced, while large ERP systems, SAP, and enterprise software in general would be much harder for customers to replace.

The golden rule seems to be hiring a “one-man army”: someone who, with extensive knowledge and AI agents, can work wonders. Unfortunately, this isn’t great news for people with narrow specializations. More and more small organizations talk about looking for so-called generalists or AI-driven developers. For now, these are just market rumors. Offers for traditional developers are still circulating on job boards, and recruitment processes are still running; I recently took part in several myself. Something seems to be brewing, but for now, the market is operating as usual.

I need to address social media here: YouTube, LinkedIn, Instagram, Facebook. All these platforms are flooded with posts about AI and automation. There are even more training courses on AI programming, automation, and the use of LLMs for various purposes, both in life and at work. This makes me wonder: how can anyone teach something that was created only two years ago, when some tools change overnight? What value does such training bring? Who buys these courses? I’ve noticed a trend of well-known people cashing in on their five minutes of fame by riding the “AI” keyword. It goes like this: everyone’s talking about it, so let’s make a training course about it. I’ll stop here because I don’t want this post to get any longer.

Employment Research

Research indicates that AI is affecting the labor market in the US, Europe, and the UK by automating routine tasks while creating new opportunities in technology sectors. In the US, office job offers in non-technology industries have declined by approximately 17-27%, while AI job offers have increased by 68%. In Europe and the UK, employees report mixed feelings: around 34% see improved working conditions thanks to AI, although many fear the loss of junior positions. Below, I break this down by region, as the regions differ in both labor law and market conditions.

USA

According to a February 2026 report, employee productivity has increased by 7.2% since ChatGPT’s debut in 2022, driven by AI. Research presented at Davos 2026 highlights the absence of mass unemployment, despite forecasts that half of junior positions would be eliminated. MIT estimates potential savings of $1.2 trillion, with 11.7-27% of the workforce exposed to automation.

Europe and UK

The trends are similar to the US: according to Randstad (January 2026), job offers mentioning “AI agents” have increased by 1587%, and Generation Z is the most concerned. In Poland and across Europe, AI is transforming white-collar roles, raising concerns that 20% of office workers will need retraining. UK data show growth in tech and finance, but a reduction in routine positions.

For comparison, here is a table that Perplexity prepared for me:

| Aspect | USA | Europe/UK |
|---|---|---|
| Increased productivity | +7.2% since 2022 (pb) | Dependent on AI adoption (itwiz) |
| New AI roles | High demand for specialists (xpert) | +1587% “AI Specialist” offers (itwiz) |
| Risk of layoffs | Junior roles (up to 50%) (euronews) | Office roles (approx. 20%) (tvn24) |

As we can see, productivity growth is a fact. Junior roles and repetitive office work, known as “paper shuffling,” are also being reduced. There’s huge hype around agent systems in Europe. Fluctuations are evident in the general labor market, but they haven’t yet shown up in unemployment rates.

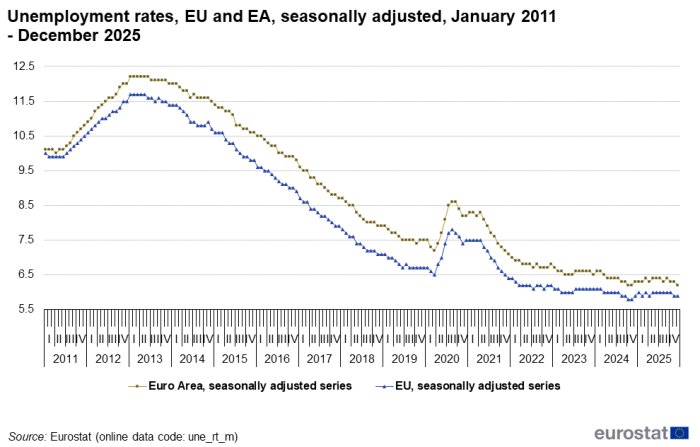

There are slight increases in the US, but I wouldn’t attribute them solely to AI. The chart shows normal, gradual increases of around 1 percentage point. Mass AI-driven unemployment isn’t happening yet; it’s more about psychology and sentiment than actual job cuts. In Europe, on the other hand, the unemployment rate has been declining steadily for years, from around 10% to around 6% in December 2025.

Source: Eurostat

This is general labor-market data, but let’s focus on the IT market. First, let’s look at layoffs in IT-related companies. Reuters recently published a very informative article listing the number of jobs cut. The most disturbing figure is the layoff of 16,000 people around the world.

Only two companies clearly declared that the layoffs were caused to some extent by the use of AI:

| Month | Company | Jobs cut | % of total workforce | Notes |
|---|---|---|---|---|
| January | Angi (ANGI.O) | Roughly 350 | Unknown | AI-driven efficiency improvements |
| January | Pinterest (PINS.N) | Less than 780 | Less than 15% | Reallocating resources to artificial intelligence-focused roles and strategy |

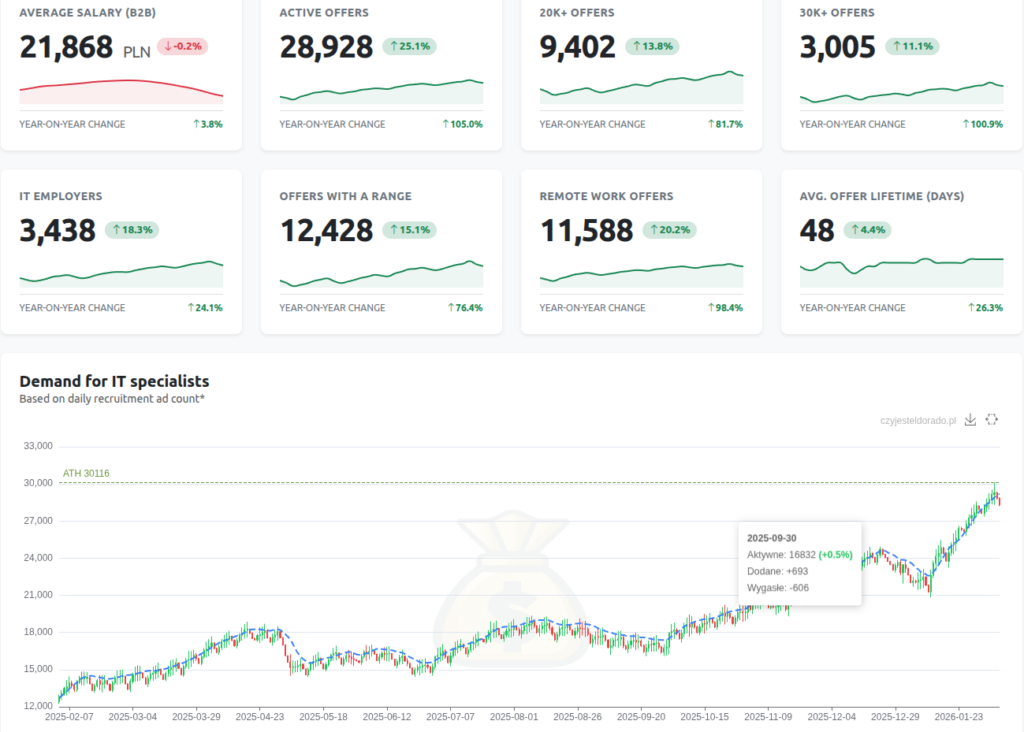

When it comes to IT offers, an excellent website for monitoring the IT market (especially in Poland) is: https://czyjesteldorado.pl/raport-rynku-pracy-it, where you will find a report from February 9, 2026.

We’ve seen steady growth in job offers since the beginning of 2025, and rates remain stable. There doesn’t seem to be much cause for concern.

In the US, job offers are clearly shifting toward ML/AI. One publication indicates that the IT job market is no longer automatically “hot” as it once was, but it still offers solid growth and significant opportunities for those with the right specialized skills, particularly in AI, data science, and cybersecurity. Continuously acquiring new skills is key, as companies struggle to find qualified employees. The number of job offers has decreased significantly (by around 36%) compared to previous years.

As in the Polish market, where only 5% of offers are for juniors, beginners struggle to find work during or after graduating from university or private courses. On top of that, 2025 was a year full of mass layoffs, which slowed the job market somewhat.

Comparing the data, the US tech market is still looking for talent, but technical skills are key to getting through the slowdown. In Poland, on the other hand, the market is active and stable, with a large number of job offers and good salary ranges, but the pace of salary growth and the recruitment boom no longer resemble the “Eldorado” of years past.

But is this a result of the AI revolution? Everything indicates that demand shifts between specializations, but the market, both locally and globally, remains accessible to a skilled specialist. Beginners are in a worse position, and AI may play some role here.

Summary and my conclusions

Since this post turned out to be much longer than I expected, I will try to summarize it efficiently and draw the right conclusions. AI is truly changing the way software is developed, but it hasn’t killed the IT market. At least not in 2026. Instead of a “here and now” revolution, we have a slow, uneven transformation in which winners and losers are clearly distinguished.

Below are the 8 most important conclusions from the above considerations and data.

Productivity is growing, but not linearly

- Over 60–65% of programmers declare increased productivity thanks to AI.

- The gains come mainly from writing code, research, and learning, not from the entire engineering process.

- Design, architecture, domain modeling, and business decisions still require humans.

AI is a tool, not an employee

- There are no credible case studies of mass replacement of engineering teams by AI.

- Most often, repetitive and supporting roles (support, back-office) are reduced, not core engineering.

- Companies that tried to “cut corners” (e.g. Klarna) ended up getting hurt.

AI’s biggest problem isn’t a lack of power, but a lack of methodology

- Hallucinations, “almost good” solutions, and difficult debugging are major sources of frustration.

- Ad-hoc prompting does not scale in real projects.

- The market is naturally moving towards Spec / Structure / Methodology Driven Development.

Vibe-coding is a niche, not a standard

- Only about 25% of respondents use agents fully “without writing code”.

- Hybrid work models dominate: human + AI, not AI instead of humans.

Juniors and narrow specializations are most at risk

- Entering the industry is more difficult today than it was 5-10 years ago.

- AI raises the “minimum threshold of competence.”

- There is a growing demand for generalists, systems engineers and people who connect technology with the business context.

The IT job market hasn’t collapsed – its shape has changed

- In Poland: stable number of offers, reasonable ranges, no panic.

- In the US: a shift in focus toward AI/ML/data, less “classic CRUD” development.

- Decrease in offers ≠ mass unemployment – this is more of a correction after years of overheating.

SaaS companies are not dying, but they have to defend themselves

- Investors are skeptical, sentiment is weak.

- Small, simple SaaS products can potentially be replaced by in-house development + AI.

- Large systems (ERP, enterprise, compliance, mission-critical) still have a huge advantage.

The narrative on social media is much more radical than reality

- AI hype is used to sell courses and training and to build reach.

- The real market moves slower, more cautiously and much more pragmatically.

My point of view

I hope the list above adequately captures the content of the article. However, I’d like to add a few thoughts of my own. The game is multidimensional – its picture is neither two-dimensional nor even five-dimensional. There are many players in the market: different company segments, market actors with diverse goals, investors, and the speculative bubble. So much is happening at once that it’s difficult to draw simple, clear-cut conclusions.

From my perspective – as someone who actively participates in the market, who has recently been intensively looking for new challenges and taking part in many recruitment processes in parallel – two things caught my eye the most.

First, very strict technical requirements are set during technical interviews. The market no longer rewards narrow competencies or the “safe middle class.” What’s expected is genuine independence, a comprehensive understanding of systems, and responsibility for technical decisions.

Secondly, there’s considerable uncertainty in the approach to AI. Some companies proudly speak of their active use of tools like Claude Code or coding agents. Others, however, limit themselves to statements like, “We’re using AI, but we don’t yet have clear boundaries or methodologies.” This is a clear signal that the market is in a transitional phase – tool adoption has outpaced process maturity.

It’s also becoming increasingly clear that the market is becoming more demanding and requires versatility. In my opinion, this will lead to a model in which companies prefer to employ a few very strong, cross-functional people rather than maintaining extensive teams that struggle to coordinate their work.

This is how I see the job market in a few years:

- strong focus on AI/ML,

- a natural connection with the areas of Big Data and Cloud,

- high value of people combining several specializations at the same time.

My vision is that companies will gradually reduce their reliance on external software development services. Instead, they will acquire top talent from the market – people capable of independently designing, implementing, and developing systems, often acting as “managers unto themselves,” working directly with the product manager or owner.

This isn’t good news for everyone. However, it is very good news for those who can combine technology, business context, and the conscious use of AI as a tool, not a prosthesis.

I wish all readers a lot of enthusiasm for learning, because it will certainly come in handy, and the only recipe for career success is: creating your own products or quickly adapting to the surrounding IT employment market. I’d love to read your thoughts and opinions on this topic.

Thanks and see you next time!